Our expertise spans data engineering, analytics, data science, and Gen AI and ML-driven initiatives, allowing businesses to tap into the full potential of the Databricks platform.

Our Databricks Solutions

Custom migrations from existing platforms to Databricks

- Comprehensive, end-to-end migrations from AWS, GCP, Snowflake and other platforms

- Seamlessly integrate diverse data sources to build a common, organization-wide data model

- Design and implement efficient ETL processes to ensure clean, reliable data

Centralized Governance & Security using Unity Catalog

- Centralize and strengthen data security and governance, making it unified and open

- Build granular governance across schemas, tables, dashboards and AI assets, all using a single framework

- Monitor, diagnose and optimize data spend and security with AI-enforced auditing and lineage

Development and production deployment of Gen AI and LLM solutions

- Quickly create advanced generative models with Databricks' AI capabilities and introduce Gen AI features into your technology stack

- Leverage LLM features trained on your own data, and fine-tune LLMs for specific data sets and domains

- Use retrieval-augmented generation (RAG) to build chat interfaces over custom data
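To make the RAG idea concrete, here is a minimal sketch of the retrieval step: pick the passages most similar to a question and place them in the prompt sent to an LLM. It is plain Python with a simple bag-of-words similarity, not a Databricks API; the function names, the sample documents, and the prompt wording are all hypothetical, and a production setup would more likely use embeddings and a vector index.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question, documents, k=2):
    """Assemble a prompt that grounds the answer in the retrieved passages."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical custom data, for illustration only.
DOCS = [
    "Policy renewals are processed within five business days.",
    "Claims above the deductible require an adjuster review.",
    "Office hours are nine to five on weekdays.",
]
```

Calling `build_prompt("How long do policy renewals take?", DOCS, k=1)` yields a prompt whose context contains only the renewals passage; that prompt would then be passed to whichever LLM endpoint is in use.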

Machine Learning and Advanced Analytics

- Use MLflow on Databricks and other machine learning tools to build predictive and prescriptive models

- Convert unstructured data into structured output

- Ensure real-time data processing capabilities to meet the demands of dynamic business environments

Driving success for your business, every step of the way!

- Centralize and unify your data, governance and security

- Build fast, secure and consistent data pipelines to streamline data management for your business

- Make your data work for your business. Make informed decisions through descriptive and predictive analytics

- Democratize your data and empower your team with easy access to information

- Automate the conversion of unstructured data into organized, usable output

- Leverage advanced Gen AI and LLM features to enhance productivity and efficiency and gain a competitive edge for your business

Success Stories

Databricks implementation for a large insurance player

- Deployed the data plane on AWS and connected it to the control plane through AWS PrivateLink

- Set up governance and security for the metastore and data assets across workspaces

- Enriched commercial insurance demographic data elements using Ennabl

- Ingested real-time data from MSSQL using Fivetran change data capture (CDC)

- Built dynamic workflows with built-in data quality expectations for different entities

- Created an abstraction layer for commercial insurance with business logic and data models, and applied user and data access controls

- Enabled data sharing with external teams and subsidiaries of BRP, and across internal teams

- Deployed DLT pipelines and Databricks workflows using GitHub Actions and Databricks Asset Bundles, and created deployment workflows for different environments

- Built the Databricks infrastructure as code using Terraform

- Used SCIM to automatically sync users and groups from Azure AD to the Databricks account
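As an illustration of what per-entity data quality expectations can look like, the sketch below checks rows against a small set of named rules and keeps only the rows that pass. It is plain Python rather than the project's actual code: on Databricks this role is typically played by DLT expectations, and the entity names, rule names, and fields here are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]

@dataclass(frozen=True)
class Expectation:
    """A named rule that a row must satisfy."""
    name: str
    check: Callable[[Row], bool]

# Hypothetical rules, keyed by entity name.
EXPECTATIONS: Dict[str, List[Expectation]] = {
    "policy": [
        Expectation("policy_id_present", lambda r: r.get("policy_id") is not None),
        Expectation(
            "premium_non_negative",
            lambda r: isinstance(r.get("premium"), (int, float)) and r["premium"] >= 0,
        ),
    ],
    "carrier": [
        Expectation("carrier_name_present", lambda r: bool(r.get("name"))),
    ],
}

def apply_expectations(entity: str, rows: List[Row]) -> Tuple[List[Row], Dict[str, int]]:
    """Return the rows that pass every rule, plus a failure count per rule."""
    rules = EXPECTATIONS.get(entity, [])
    passed: List[Row] = []
    failures: Dict[str, int] = {}
    for row in rows:
        failed = [rule.name for rule in rules if not rule.check(row)]
        if failed:
            for name in failed:
                failures[name] = failures.get(name, 0) + 1
        else:
            passed.append(row)
    return passed, failures
```

Keeping the rules in a dictionary keyed by entity is one way to let a single workflow apply different checks to different entities; the failure counts can be logged or used to fail a run once a threshold is exceeded.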

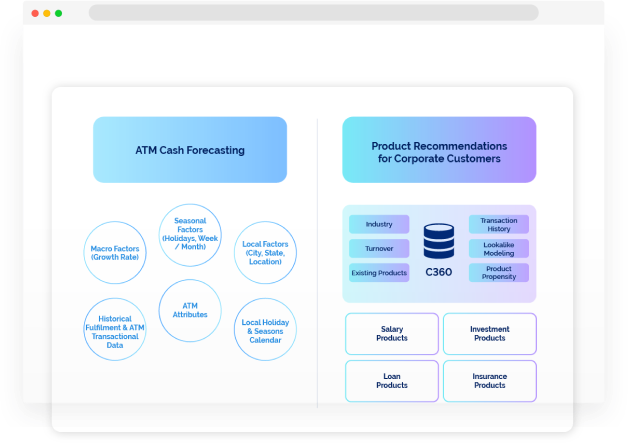

Optimizing Cashflow and Driving Growth for a European Bank on Databricks

Migration from GCP to Databricks for Data Analysis and Reporting

Unlocking Enclosed Data: Transforming Commercial Insurance Processes with Intelligent Document Processing

When faced with the challenge of managing and analyzing the ever-growing amount of Enclosed Data within physical and digital archives, a team took on the task of processing 18 million insurance documents from various carriers. Our case study details the efforts in accurately classifying carriers, detecting document types, and extracting crucial information for efficient insurance claim processing.

Download Case Study

Whitepapers

Databricks: Key Capabilities of a Modern & Open Data Platform

Today, the success of organizations depends on their data teams' ability to effectively drive growth. But managing diverse teams and data architectures can be a challenge. Discover how the Databricks platform empowers modern data teams with self-service capabilities, flexibility, speed, and quality while maintaining proper governance.

Download Whitepaper