The author is a Senior Data Consultant in Eucloid. For any queries, reach out to us at: contact@eucloid.com

The Move to Databricks: How This Software Company Saved 30% on Data Analysis Costs

It's no secret that data analysis and reporting are crucial elements for any organization looking to make informed business decisions. The use of data analysis tools has become essential in deriving valuable insights from large volumes of data generated by businesses daily. However, with the continuous growth of data volumes, businesses face the challenge of finding a cost-effective and high-performing platform that can handle their data analysis and reporting requirements.

2500 Complex Data Models, Endless Data

With approximately 2500 data models performing different sets of data analysis activities, the client was facing mounting costs and performance issues. To address this, the team undertook a migration process, transferring over 2,000 tables from BigQuery to Databricks. In total, 50 TB+ of data was successfully migrated, resulting in improved cost efficiency and overall performance.

One of the main challenges that the client needed to address with Google Cloud Platform was the cost scalability. The client had large and unpredictable data workloads resulting in high costs for using the GCP tool. Moreover, GCP's pricing model, which is based on hourly usage and tiers, made it difficult for the client to accurately estimate their expenses. This was resulting in budget constraints and was hindering their ability to fully utilize the platform's capabilities.

This led them to consider migrating to Databricks, a cloud-based analytics platform known for its cost optimization and performance improvement capabilities.

While the decision to migrate was based on the considerations above, the migration process too posed a significant obstacle for the client due to the nature of their data and the complexity of their models. With such a vast and expansive volume of crucial data, any errors or delays in the migration process could have severe consequences for the client's business operations and decision-making. Additionally, the differences in querying languages and techniques used by GCP and Databricks added another layer of complexity, requiring thorough translation and testing to ensure accuracy and compatibility. A successful and seamless transition to Databricks thus required careful planning, strategy, and thorough testing.

.png)

The Right Fit: Why Databricks Proved to be the Ideal Solution

Databricks was undoubtedly a better-suited platform for data analysis and reporting requirements. Firstly, Databricks follows a pay-as-you-go model, allowing the client to only pay for the resources and services they use. This makes it more cost-effective, especially for businesses with large or fluctuating data workloads. They could easily scale up or down their usage based on their specific needs, without the worry of being tied into a fixed contract or deal.

Databricks also runs on a serverless architecture, freeing the client from the hassle of managing servers. This not only saves time and resources but also enables them to scale their data warehousing needs without any additional infrastructure costs. Furthermore, with up to 12x better price/performance than GCP, Databricks provided the client with better value for their money.

Additionally, with self-service tools and collaborative features, Databricks proved to be a more user-friendly and efficient platform for data teams. Its integration with open-source technologies such as MLflow and Apache Spark™ also provided the client with the flexibility to build advanced analytics and AI/ML capabilities quickly.

A Breakdown of Our Migration Approach

With over 25 resources involved in the migration process, we were able to complete the complex migration within three months. The project was divided into three different workstreams.

(1).png)

1. Data Ingestion to Databricks:

We developed a comprehensive tool for data-ingestion, an Autoloader on top of Databricks, to copy native BQ source tables and ingest data that was present on S3 data lake. This solution enables us to easily transfer data from any OLTP system to OLAP environment. Moreover, it can seamlessly ingest data from a wide variety of data sources including databases such as MySQL, Postgres, MongoDB, and from APIs. It has multiple configurations for ingestion, allowing us to set the frequency and other parameters according to our needs.

One of the key features of our autoloader is its ability to capture changes in both schema and data continuously through CDC (Change Data Capture). Our tooling also offers extensive reconciliation capabilities, enabling us to compare and validate data between source and destination. With pre-built dashboards, we can easily monitor data ingestion volume and quality, helping us to identify any discrepancies and ensure data accuracy.

This approach enabled us to incrementally and efficiently process new data files as they arrived in cloud storage, without any additional setup. We were able to ingest various file formats such as JSON, CSV, PARQUET, and TEXT and load them into Databricks.

Moreover, historical data loading was done directly into the Databricks production region, since the volume of data was immense and required a dedicated approach.

2. Data Transformation:

Data transformation was a crucial step in the migration process. We used the data build tool (DBT) on Databricks to perform the necessary transformations. DBx workspaces were created, and DBT was integrated into the platform for better efficiency. We migrated over 800 dbt models, designed to transform raw data into actionable insights for business decision making. Daily/incremental feeds were carried out in the development environment, and after unit testing, DBT models were promoted to the user acceptance testing (UAT) region for complete data matching and further testing.

These dbt models were extensive, with some exceeding 1000 lines of code. They were organized into 15 distinct workflows, each with complex lineage graphs. These workflows ran at varying frequencies, including daily and weekly, to ensure the timely transformation of data.

3. Looker Dashboards:

To generate business reports, we used Looker, a business intelligence and data visualization tool. A Looker environment was created and connected with Databricks in the backend. One of the main challenges for this workstream was converting 1500+ Looker queries from GCP to Databricks. To mitigate this challenge, we partially automated the process using Python and manually checked and verified the queries before promoting them to the UAT region for quality assurance.

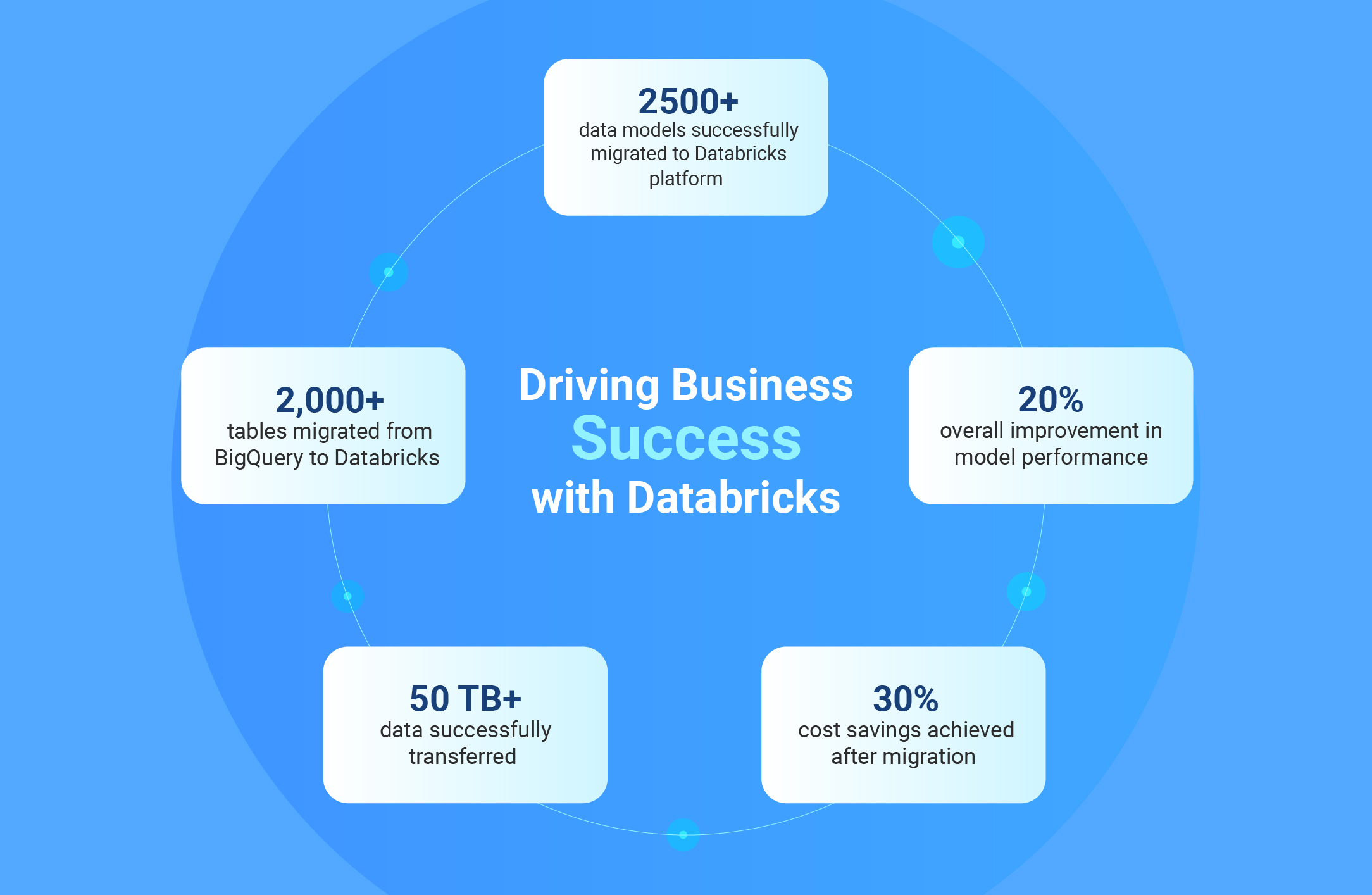

Driving Business Success with Databricks

The project's most significant success was the successful migration of all 2500 models to Databricks platform. This enabled the client to continue their data analysis and reporting activities without any disruptions or delays.

The overall performance of the data models improved by approximately 20%, resulting in faster processing times. This enabled the client to generate their business reports in a shorter turnaround time.

A 30% reduction in cost was also achieved, thanks to Databricks' serverless architecture and pay-as-you-go model. By eliminating the need to manage servers and providing flexible pricing options, Databricks enabled the client to optimize their costs and utilize their resources more efficiently.

The migration to Databricks also simplified the client's data platform, providing them with a single, modern platform for all their data-analytics, business reporting, Machine learning and AI use cases. This improved their governance processes and provided a unified user experience across clouds and data teams.

With Databricks as their new analytics platform, our client is now better equipped to make data-driven decisions and drive their business towards long-term success.

Want to find out how Databricks can transform your data analysis capabilities? Reach out to us at databricks@eucloid.com for a consultation.

Posted on : August 20, 2024

Category : Data Engineering

About the Authors